# Azure Document Generation Architecture with AI

This Terraform configuration deploys a comprehensive Azure architecture for an AI-powered document generation system. The solution enables organizations to create intelligent structured and unstructured documents grounded in their enterprise data using Azure AI services.

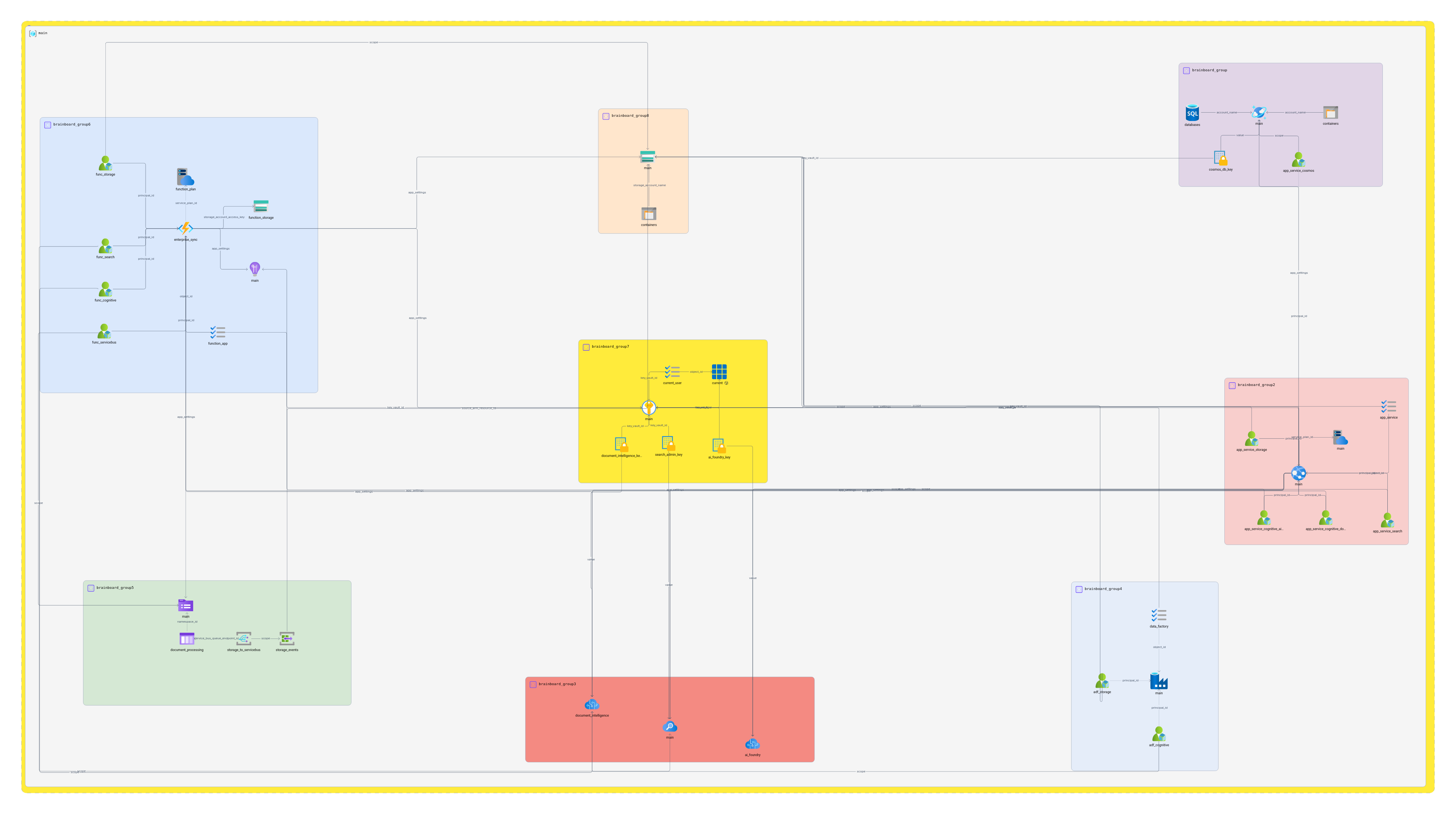

## Architecture Overview

The solution combines retrieval, summarization, and generation with document persistence to support faster document creation workflows. Users can interact through natural language to generate documents that incorporate organizational knowledge.

### Key Components

- **Azure App Service**: Hosts the web frontend for user interactions

- **Azure Storage Account**: Stores enterprise documents and generated content

- **Azure Data Factory**: Orchestrates enterprise data sync processes

- **Azure Function App**: Processes document ingestion and sync events

- **Azure Service Bus**: Handles messaging for document processing workflows

- **Azure Event Grid**: Triggers processing when new documents are uploaded

- **Azure AI Document Intelligence**: Processes and indexes documents from storage

- **Azure AI Search**: Provides searchable indexes for semantic search capabilities

- **Azure AI Foundry**: Delivers AI models as a service for chat completion and document generation

- **Azure Cosmos DB**: Stores conversation history and user interactions

- **Azure Key Vault**: Securely manages secrets and connection strings

- **Application Insights**: Provides monitoring and diagnostics

## Prerequisites

### Required Tools

- **Azure CLI** configured and authenticated (`az login`)

- **Terraform** installed (>= 1.2)

- **Azure subscription** with appropriate permissions
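The following pre-flight sketch checks the Terraform requirement above. It is illustrative and not part of this configuration: it reads the version from `terraform version -json` and compares it with the 1.2 minimum using `sort -V`, which assumes GNU coreutils (or another `sort` that supports version ordering).

```bash
#!/usr/bin/env bash
# Pre-flight helpers for the requirements above (illustrative sketch).

# Succeeds when version $1 is greater than or equal to version $2.
# Uses `sort -V` (version sort), available in GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Reads `terraform version -json` output on stdin and prints the version number.
tf_version_from_json() {
  sed -n 's/.*"terraform_version": *"\([^"]*\)".*/\1/p'
}
```

For example, `version_ge "$(terraform version -json | tf_version_from_json)" 1.2 && echo "Terraform OK"`. Separately, `az account show --query name -o tsv` prints the active subscription name if the CLI is logged in.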

### Required Permissions

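- Contributor access to the target Azure subscription

- Ability to create service principals and role assignments (Contributor alone cannot create role assignments; Owner or User Access Administrator can), because the configuration assigns RBAC roles to managed identities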

- Access to create Key Vault and manage access policies

### Optional Setup

- Configure Terraform backend for state management (uncomment backend block in `provider.tf`)
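When you enable the backend, one option is to keep its settings out of `provider.tf` and pass them at init time as a partial configuration file. A minimal sketch, assuming the uncommented block is `backend "azurerm" {}` (or omits these keys) and that the state storage account already exists; every name below is a hypothetical placeholder.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical state-storage names; replace them with your own.
# Writes a partial backend configuration for the azurerm backend.
cat > backend.hcl <<'EOF'
resource_group_name  = "rg-tfstate"
storage_account_name = "sttfstatedocgen"
container_name       = "tfstate"
key                  = "docgen.dev.tfstate"
EOF
```

Then initialize with `terraform init -backend-config=backend.hcl`.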

## Project Structure

```
├── provider.tf        # Terraform and Azure provider configuration
├── locals.tf          # Local values and naming conventions
├── variables.tf       # Input variable definitions
├── terraform.tfvars   # Variable values (customize for your environment)
├── main.tf            # Core Azure resources and configurations
└── README.md          # This documentation
```

## Usage

### 1. Clone and Configure

```bash

# Clone or download the Terraform files

# Customize terraform.tfvars with your specific values

```
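A starting `terraform.tfvars` can be written with the defaults listed in the Configuration table below. This is a sketch: it assumes `variables.tf` declares these six variables as strings, and you will likely change `project_name`, `environment`, and `location` for your own environment.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Write terraform.tfvars using the documented default values.
# The quoted 'EOF' prevents shell expansion inside the heredoc.
cat > terraform.tfvars <<'EOF'
project_name                = "docgen"
environment                 = "dev"
location                    = "East US"
app_service_plan_sku        = "B2"
ai_search_sku               = "standard"
cosmos_db_consistency_level = "Session"
EOF
```

Terraform automatically loads a file named `terraform.tfvars` from the working directory, so the `-var-file` flag in the following steps is optional for this file name.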

### 2. Initialize Terraform

```bash

terraform init

```

### 3. Review the Plan

```bash

terraform plan -var-file="terraform.tfvars"

```

### 4. Deploy the Infrastructure

```bash

terraform apply -var-file="terraform.tfvars"

```

### 5. Destroy (when needed)

```bash

terraform destroy -var-file="terraform.tfvars"

```
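Steps 2 to 4 can be combined into one script that applies exactly the plan you reviewed, by saving it with `-out` and passing the plan file to `terraform apply`. A sketch; the `DRY_RUN` switch and the `tfplan` file name are illustrative choices, not part of this configuration. The saved plan already contains the variable values, so `-var-file` appears only on `plan`.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Print commands instead of running them when DRY_RUN=1 (useful for review or CI logs).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

deploy() {
  run terraform init -input=false
  # Save the reviewed plan so apply executes exactly these changes.
  run terraform plan -input=false -var-file="terraform.tfvars" -out=tfplan
  # The saved plan already carries the variable values.
  run terraform apply -input=false tfplan
}

# Only deploy when invoked as: ./deploy.sh deploy
if [ "${1:-}" = "deploy" ]; then
  deploy
fi
```

Running `DRY_RUN=1 ./deploy.sh deploy` prints the three commands without executing them.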

## Configuration

### Key Variables to Customize

| Variable | Description | Default |
|----------|-------------|---------|
| `project_name` | Name prefix for all resources | `docgen` |
| `environment` | Environment identifier | `dev` |
| `location` | Azure region | `East US` |
| `app_service_plan_sku` | App Service Plan size | `B2` |
| `ai_search_sku` | Azure AI Search tier | `standard` |
| `cosmos_db_consistency_level` | Cosmos DB consistency | `Session` |
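Because `project_name` and `environment` feed resource names, keep Azure naming rules in mind: storage account names must be 3 to 24 characters of lowercase letters and digits, and globally unique. The sketch below shows one hypothetical way to derive a compliant storage account name (`st` + project + environment + optional suffix); the actual pattern in `locals.tf` may differ.

```bash
#!/usr/bin/env bash

# Hypothetical naming helper: builds a storage account name from project and
# environment, keeping only lowercase letters/digits and truncating to 24 chars.
storage_account_name() {
  local project="$1" env="$2" suffix="${3:-}"
  local name
  name="$(printf 'st%s%s%s' "$project" "$env" "$suffix" \
    | tr '[:upper:]' '[:lower:]' | tr -cd 'a-z0-9')"
  printf '%s\n' "${name:0:24}"
}
```

An optional suffix (for example a short hash) is a common way to keep names globally unique across subscriptions.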

### Storage Containers

The solution automatically creates the following storage containers:

- `enterprise-documents`: Stores source documents for processing

- `generated-documents`: Stores AI-generated documents

- `document-templates`: Stores document templates
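To confirm the containers exist after `terraform apply`, the helper below compares a list of container names with the three expected ones. It is a sketch that reads names on stdin so it can be fed from the Azure CLI; `STORAGE_ACCOUNT_NAME` in the usage line is a placeholder.

```bash
#!/usr/bin/env bash

# Containers this configuration is documented to create.
EXPECTED_CONTAINERS=(enterprise-documents generated-documents document-templates)

# Reads existing container names (one per line) on stdin and prints any expected
# container that is missing. Returns non-zero if something is missing.
missing_containers() {
  local existing missing=0 c
  existing="$(cat)"
  for c in "${EXPECTED_CONTAINERS[@]}"; do
    if ! grep -qxF "$c" <<< "$existing"; then
      printf '%s\n' "$c"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `az storage container list --account-name "$STORAGE_ACCOUNT_NAME" --auth-mode login --query "[].name" -o tsv | missing_containers && echo "All containers present"`.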

### Cosmos DB Structure

- **Database**: `DocumentGenerationDB`

- **Containers**:
  - `Conversations`: Stores chat interactions (partitioned by `/conversationId`)
  - `UserInteractions`: Stores user activity (partitioned by `/userId`)
  - `GeneratedDocuments`: Stores document metadata (partitioned by `/documentId`)
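Because a container's partition key cannot be changed in place once the container exists, it is worth checking the deployed values against the documented ones. A sketch; `COSMOS_ACCOUNT` and `RESOURCE_GROUP` are placeholders, and the query path assumes the usual output shape of `az cosmosdb sql container show` (`resource.partitionKey.paths`).

```bash
#!/usr/bin/env bash

# Partition key path documented for each Cosmos DB container.
expected_partition_key() {
  case "$1" in
    Conversations)      echo "/conversationId" ;;
    UserInteractions)   echo "/userId" ;;
    GeneratedDocuments) echo "/documentId" ;;
    *) return 1 ;;
  esac
}

# Compares deployed partition keys with the documented ones via the Azure CLI.
# COSMOS_ACCOUNT and RESOURCE_GROUP are placeholders you must set first.
check_partition_keys() {
  local c actual status=0
  for c in Conversations UserInteractions GeneratedDocuments; do
    actual="$(az cosmosdb sql container show \
      --account-name "$COSMOS_ACCOUNT" --resource-group "$RESOURCE_GROUP" \
      --database-name DocumentGenerationDB --name "$c" \
      --query "resource.partitionKey.paths[0]" -o tsv)"
    if [ "$actual" != "$(expected_partition_key "$c")" ]; then
      echo "$c: expected $(expected_partition_key "$c"), found $actual"
      status=1
    fi
  done
  return "$status"
}
```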

## Resource Connections and Dependencies

### Data Flow Architecture

1. **Enterprise Data Ingestion**

   - **Automated Sync**: Azure Data Factory orchestrates scheduled data ingestion from enterprise systems
   - **Manual Upload**: Documents are uploaded directly to the `enterprise-documents` container in the Storage Account
   - **Event-Driven Processing**: Event Grid routes blob-created events to Service Bus when new documents arrive
   - **Message Queue**: Service Bus queues document processing tasks for reliable handling

2. **Document Processing Pipeline**

   - The Function App receives blob creation events via Service Bus
   - Azure AI Document Intelligence processes documents and extracts their content
   - Processed content is indexed in Azure AI Search for semantic search
   - Document metadata is stored in Cosmos DB for tracking and retrieval

3. **AI Processing Pipeline**

   - App Service receives user requests through the web frontend
   - Azure AI Foundry provides the language models used for document generation
   - Conversation context is maintained in Cosmos DB

4. **Document Generation Workflow**

   - User interactions trigger searches against the indexed enterprise data
   - AI models generate contextual documents based on the retrieved information
   - Generated documents are stored in the `generated-documents` container

## Enterprise Data Sync Process

### Automated File Ingestion

The solution includes a comprehensive enterprise data sync system that handles:

**Azure Data Factory Pipelines**:

- Scheduled data ingestion from enterprise file systems, databases, and APIs

- Support for various data sources (on-premises, cloud, hybrid)

- Data transformation and cleansing during ingestion

- Error handling and retry mechanisms

**Event-Driven Processing**:

- Event Grid monitors storage account for new document uploads

- Service Bus ensures reliable message delivery for processing tasks

- Function App processes documents asynchronously

- Automatic indexing and content extraction
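The Function App code is not part of this configuration. As an illustration of the filtering step only, the sketch below parses the `subject` of a Blob Storage event (format `/blobServices/default/containers/<container>/blobs/<path>`) and accepts only `Microsoft.Storage.BlobCreated` events in `enterprise-documents`. It is written in shell to match this README; a real function would implement the same check in its own runtime language.

```bash
#!/usr/bin/env bash

# Splits an Event Grid Blob Storage event subject of the form
#   /blobServices/default/containers/<container>/blobs/<blob path>
# into "<container> <blob path>". Returns non-zero for other subjects.
parse_blob_subject() {
  local subject="$1" rest container blob
  case "$subject" in
    /blobServices/default/containers/*/blobs/*) ;;
    *) return 1 ;;
  esac
  rest="${subject#/blobServices/default/containers/}"
  container="${rest%%/blobs/*}"
  blob="${rest#*/blobs/}"
  printf '%s %s\n' "$container" "$blob"
}

# Only newly created blobs in enterprise-documents enter the pipeline.
should_process() {
  local event_type="$1" subject="$2" parsed
  [ "$event_type" = "Microsoft.Storage.BlobCreated" ] || return 1
  parsed="$(parse_blob_subject "$subject")" || return 1
  [ "${parsed%% *}" = "enterprise-documents" ]
}
```

Filtering can also happen before delivery: `az eventgrid event-subscription create` accepts `--included-event-types Microsoft.Storage.BlobCreated` and `--subject-begins-with /blobServices/default/containers/enterprise-documents/`, so the queue only receives relevant events.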

**Function App Capabilities**:

- **Blob Trigger**: Automatically processes new documents uploaded to storage

- **Service Bus Trigger**: Handles queued document processing tasks

- **Timer Trigger**: Performs scheduled sync operations

- **HTTP Trigger**: Supports manual sync operations via API calls
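For the HTTP trigger, a manual sync call could look like the sketch below. The function name is hypothetical (use whatever your deployed code defines), and the URL assumes the default `azurewebsites.net` host name and the default `api` route prefix.

```bash
#!/usr/bin/env bash

# Builds the default URL of an HTTP-triggered function. Azure Functions serves
# HTTP triggers under the /api route prefix unless host.json overrides it.
function_url() {
  local app="$1" fn="$2"
  printf 'https://%s.azurewebsites.net/api/%s\n' "$app" "$fn"
}

# Calls a (hypothetical) manual-sync function; the function key is sent in the
# x-functions-key header rather than in the query string.
trigger_manual_sync() {
  local app="$1" fn="$2" key="$3"
  curl --fail --silent --show-error -X POST \
    -H "x-functions-key: ${key}" \
    "$(function_url "$app" "$fn")"
}
```

The key can be retrieved with `az functionapp function keys list --resource-group "$RESOURCE_GROUP" --name "$FUNCTION_APP_NAME" --function-name sync-documents --query default -o tsv`.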

### Supported Enterprise Integration Patterns

1. **File System Integration**:

```bash

# Example: Sync from on-premises file share

# Data Factory can copy files from SMB/NFS shares

```

2. **Database Integration**:

```bash

# Example: Extract documents from SQL Server, Oracle, etc.

# Data Factory supports 90+ data connectors

```

3. **API Integration**:

```bash

# Example: Pull documents from REST APIs, SharePoint, etc.

# Custom activities in Data Factory for complex integrations

```

4. **Real-time Integration**:

```bash

# Example: Process documents as soon as they're created

# Event Grid + Function App for immediate processing

```

### Key Resource Relationships

- **App Service → App Service Plan**: Uses `service_plan_id` reference

- **App Service → Storage**: Connected via connection string and managed identity

- **App Service → Cosmos DB**: Connected via endpoint and managed identity with RBAC

- **App Service → AI Services**: Connected via endpoints and managed identity with RBAC

- **App Service → Key Vault**: Managed identity with secret read permissions

- **Data Factory → Storage**: Managed identity with Storage Blob Data Contributor role

- **Data Factory → AI Document Intelligence**: Managed identity with Cognitive Services User role

- **Function App → Storage**: Managed identity with Storage Blob Data Contributor role

- **Function App → Service Bus**: Managed identity with Service Bus Data Receiver role

- **Function App → AI Search**: Managed identity with Search Index Data Contributor role

- **Event Grid → Service Bus**: Routes storage events to processing queue

- **Storage → AI Document Intelligence**: Processes documents from storage containers

- **AI Search → Storage**: Indexes processed document content

- **All Services → Application Insights**: Integrated monitoring and diagnostics

### Security and Access Control

- **Managed Identity**: App Service uses system-assigned managed identity

- **RBAC Assignments**:
  - Storage Blob Data Contributor (App Service → Storage)
  - Cosmos DB Built-in Data Contributor (App Service → Cosmos DB)
  - Search Index Data Contributor (App Service → AI Search)
  - Cognitive Services User (App Service → AI services)

- **Key Vault**: Stores sensitive connection strings and API keys

- **Network Security**: HTTPS enforcement, minimum TLS 1.2
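To verify that the assignments above actually exist at the expected scope, a small check helps. A sketch: `storage_scope` builds the storage account's resource ID and `has_role` checks a list of role names read from stdin; the subscription, resource group, account, and app names are placeholders.

```bash
#!/usr/bin/env bash

# Builds the ARM resource ID of a storage account, used as a role-assignment scope.
storage_scope() {
  local subscription="$1" resource_group="$2" account="$3"
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Storage/storageAccounts/%s\n' \
    "$subscription" "$resource_group" "$account"
}

# Reads role names (one per line) on stdin and succeeds if $1 is among them.
has_role() {
  grep -qxF "$1"
}
```

Usage: get the App Service identity with `PRINCIPAL_ID=$(az webapp identity show --resource-group "$RESOURCE_GROUP" --name "$APP_NAME" --query principalId -o tsv)`, then run `az role assignment list --assignee "$PRINCIPAL_ID" --scope "$(storage_scope "$SUBSCRIPTION_ID" "$RESOURCE_GROUP" "$STORAGE_ACCOUNT_NAME")" --query "[].roleDefinitionName" -o tsv | has_role "Storage Blob Data Contributor"`. New assignments can take a few minutes to take effect.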

## Post-Deployment Configuration

### 1. Configure Enterprise Data Sync

**Set up Data Factory Pipelines**:

```bash

# Create linked services for your enterprise data sources

# Configure pipelines for scheduled data ingestion

# Set up triggers for automated sync operations

```

**Deploy Function App Code**:

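```bash
# Deploy the document processing functions with Azure Functions Core Tools,
# run from the root of your function project. The blob, Service Bus, timer,
# and HTTP triggers are defined in that project's code and bindings.
# FUNCTION_APP_NAME is a placeholder for the Function App created by Terraform.
func azure functionapp publish "$FUNCTION_APP_NAME"
```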

### 2. Upload Enterprise Documents

**Manual Upload**:

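```bash
# Upload your enterprise documents to the enterprise-documents container.
# STORAGE_ACCOUNT_NAME is a placeholder for the account created by Terraform;
# --auth-mode login uses your Azure AD identity (requires a blob data role).
az storage blob upload-batch \
  --destination enterprise-documents \
  --source ./your-documents \
  --account-name "$STORAGE_ACCOUNT_NAME" \
  --auth-mode login
```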

**Automated Upload via Data Factory**:

- Configure source connections in Data Factory

- Create copy activities to move files to storage

- Set up schedules for regular sync operations

### 3. Configure AI Search Index

- Set up search index schema based on your document structure

- Configure indexers to process documents from storage

- Define skillsets for content extraction and enrichment
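As a starting point, an index can be created through the Azure AI Search REST API with a `PUT` to `/indexes/<name>`. The schema below is a hypothetical minimum (a string key, the extracted text, and the source blob path); `SEARCH_SERVICE` and `SEARCH_ADMIN_KEY` are placeholders, and `2023-11-01` is one generally available REST API version.

```bash
#!/usr/bin/env bash

# Minimal, hypothetical index schema: a key, the extracted text, and the source path.
# Adjust the fields to your own document structure.
index_definition() {
  local name="$1"
  cat <<EOF
{
  "name": "${name}",
  "fields": [
    { "name": "id", "type": "Edm.String", "key": true, "filterable": true },
    { "name": "content", "type": "Edm.String", "searchable": true },
    { "name": "sourcePath", "type": "Edm.String", "filterable": true, "retrievable": true }
  ]
}
EOF
}

# Creates or updates the index through the Azure AI Search REST API.
# SEARCH_SERVICE and SEARCH_ADMIN_KEY are placeholders you must set.
create_index() {
  local name="$1"
  index_definition "$name" | curl --fail --silent --show-error -X PUT \
    "https://${SEARCH_SERVICE}.search.windows.net/indexes/${name}?api-version=2023-11-01" \
    -H "Content-Type: application/json" \
    -H "api-key: ${SEARCH_ADMIN_KEY}" \
    --data-binary @-
}
```

The admin key is available from `az search admin-key show --resource-group "$RESOURCE_GROUP" --service-name "$SEARCH_SERVICE" --query primaryKey -o tsv`. Data sources, indexers, and skillsets are created the same way under `/datasources`, `/indexers`, and `/skillsets`.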

### 4. Deploy Application Code

- Deploy your web application to the App Service

- Configure environment variables and connection strings

- Test document generation workflows
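Rather than copying connection strings into app settings, App Service can resolve them from Key Vault through a Key Vault reference, using the app's managed identity (which already has secret read permissions in this design). A sketch; the vault, secret, and setting names are hypothetical.

```bash
#!/usr/bin/env bash

# Builds an App Service Key Vault reference. The app resolves it at runtime using
# its managed identity, so the secret value never appears in app settings.
kv_reference() {
  local vault="$1" secret="$2"
  printf '@Microsoft.KeyVault(SecretUri=https://%s.vault.azure.net/secrets/%s/)\n' "$vault" "$secret"
}
```

Usage: `az webapp config appsettings set --resource-group "$RESOURCE_GROUP" --name "$APP_NAME" --settings "COSMOS_CONNECTION=$(kv_reference kv-docgen-dev cosmos-connection)"`. A zip package can then be deployed with `az webapp deploy --resource-group "$RESOURCE_GROUP" --name "$APP_NAME" --src-path app.zip --type zip`.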

## Monitoring and Troubleshooting

### Application Insights Integration

- All services integrated with Application Insights

- Monitor API calls, performance metrics, and errors

- Set up alerts for critical thresholds

### Key Metrics to Monitor

- App Service response times and error rates

- Cosmos DB request units and throttling

- AI Search query latency and success rates

- Storage account transaction metrics

- Data Factory pipeline execution status and duration

- Function App execution metrics and error rates

- Service Bus message processing rates and dead letter counts

- Event Grid delivery success rates

### Common Issues

1. **Permission Errors**: Verify managed identity role assignments

2. **Key Vault Access**: Ensure proper access policies are configured

3. **AI Service Quotas**: Monitor usage against service limits

4. **Cosmos DB Throttling**: Adjust throughput based on usage patterns

5. **Data Factory Failures**: Check pipeline logs and linked service connections

6. **Function App Timeouts**: Adjust timeout settings for large document processing

7. **Service Bus Dead Letters**: Monitor and reprocess failed messages

8. **Event Grid Delivery Failures**: Verify endpoint accessibility and permissions
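For dead letters (issue 7), a small check can turn a queue's dead-letter count into a pass/fail signal for a script or scheduled job. A sketch; the threshold and the namespace and queue names in the usage line are placeholders.

```bash
#!/usr/bin/env bash

# Reads a dead-letter message count on stdin and fails if it exceeds a threshold
# (default 0), so the check can gate a script or a scheduled job.
check_dead_letters() {
  local threshold="${1:-0}" count
  read -r count
  if [ "$count" -gt "$threshold" ]; then
    echo "dead-letter count ${count} exceeds threshold ${threshold}" >&2
    return 1
  fi
}
```

Usage: `az servicebus queue show --resource-group "$RESOURCE_GROUP" --namespace-name "$SERVICE_BUS_NAMESPACE" --name "$QUEUE_NAME" --query countDetails.deadLetterMessageCount -o tsv | check_dead_letters 10`.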

## Cost Optimization

### Estimated Monthly Costs (East US)

- **App Service Plan (B2)**: ~$60/month

- **Storage Account (LRS)**: ~$5-20/month (based on usage)

- **Cosmos DB**: ~$24/month per container (400 RU/s each)

- **AI Search (Standard)**: ~$250/month

- **AI Services**: Pay-per-use based on API calls

- **Application Insights**: ~$5-15/month (based on telemetry volume)
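Adding the fixed line items gives a rough monthly baseline before AI usage. A sketch, assuming the ~$24 Cosmos DB figure applies to each of the three containers listed under Cosmos DB Structure and excluding the pay-per-use AI services:

```bash
#!/usr/bin/env bash

# Rough monthly baseline (USD) from the estimates above; AI services excluded.
# $1 = number of Cosmos DB containers at ~$24/month each (default 3).
baseline_range() {
  local containers="${1:-3}"
  local app_service=60 search=250 cosmos=$(( 24 * containers ))
  local low=$(( app_service + 5 + cosmos + search + 5 ))     # storage low, App Insights low
  local high=$(( app_service + 20 + cosmos + search + 15 ))  # storage high, App Insights high
  echo "${low}-${high}"
}
```

With three containers this gives roughly $392 to $417 per month, with AI Search Standard as the largest single item.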

### Cost Optimization Tips

1. Use Azure Reserved Instances for predictable workloads

2. Monitor and optimize Cosmos DB throughput

3. Implement lifecycle policies for storage

4. Set up budget alerts and cost analysis
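For tip 3, a storage lifecycle policy can age out generated documents automatically. A sketch with hypothetical thresholds (Cool tier after 30 days, delete after 365 days) scoped to the `generated-documents` container:

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical lifecycle rule: move generated documents to the Cool tier after
# 30 days and delete them after 365 days. Tune thresholds to your retention needs.
cat > lifecycle-policy.json <<'EOF'
{
  "rules": [
    {
      "enabled": true,
      "name": "age-out-generated-documents",
      "type": "Lifecycle",
      "definition": {
        "filters": {
          "blobTypes": [ "blockBlob" ],
          "prefixMatch": [ "generated-documents/" ]
        },
        "actions": {
          "baseBlob": {
            "tierToCool": { "daysAfterModificationGreaterThan": 30 },
            "delete": { "daysAfterModificationGreaterThan": 365 }
          }
        }
      }
    }
  ]
}
EOF
```

Apply it with `az storage account management-policy create --account-name "$STORAGE_ACCOUNT_NAME" --resource-group "$RESOURCE_GROUP" --policy @lifecycle-policy.json`. Since the storage account is managed by Terraform, expressing the same rule as an `azurerm_storage_management_policy` resource avoids configuration drift.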

## Support and Resources

- [Azure Architecture Center](https://docs.microsoft.com/en-us/azure/architecture/)

- [Terraform Azure Provider Documentation](https://registry.terraform.io/providers/hashicorp/azurerm/latest/docs)

- [Azure AI Document Intelligence Documentation](https://docs.microsoft.com/en-us/azure/ai-services/document-intelligence/)

- [Azure AI Search Documentation](https://docs.microsoft.com/en-us/azure/search/)

## License

This Terraform configuration is provided as-is for educational and deployment purposes. Ensure compliance with your organization's policies and Azure subscription terms.
